"Trust your gut" isn't mysticism; it's a value function trained on years of real outcomes. AI can't replicate it because current training uses short-term feedback on curated datasets, not the messy, long-horizon reality that shapes human judgment.

Category: AI

Strategy in the Age of Infinite Slop

AI won't replace strategy consultants because corporate decisions yield sparse, delayed feedback and can't be A/B tested. While LLMs generate options brilliantly, only humans can judge which will survive contact with reality years later.

The New Computer in the Clinic

Healthcare is moving from "point-and-click" EHRs to "intent and oversight." LLMs can finally read clinical narratives, but they need deterministic guardrails to convert physician storytelling into safe, structured actions patients can trust.

AI and the Prepared Mind: Engineering Luck in Drug Discovery

AI's "Logic Engine" designs perfect molecules in silico, but most still fail in Phase 2. The gap isn't chemistry—it's understanding human biology. Dr. Eng's GLP-1 discovery came from embodied clinical context AI can't replicate yet.

How AI Gets Paid Is How It Scales

AI agents will scale in healthcare when they create a labor dividend—either eliminating admin overhead or letting each scarce clinician produce more. Reimbursement models must shift from human minutes to AI-driven outcomes.

When AI Meets Aggregation Theory in Healthcare

Epic isn't a true platform—it doesn't pass Bill Gates's test where ecosystem value exceeds the company's own. With IAS rails and consumer AI assistants, healthcare's first real aggregator could finally own demand, not just supply.

America’s Patchwork of Laws Could Be AI’s Biggest Barrier in Care

State AI rules are regulating software like a risky human, creating 50 versions of compliance. A federal framework could separate assistive from autonomous agents, letting FDA handle high-risk use while safe harbors accelerate adoption.

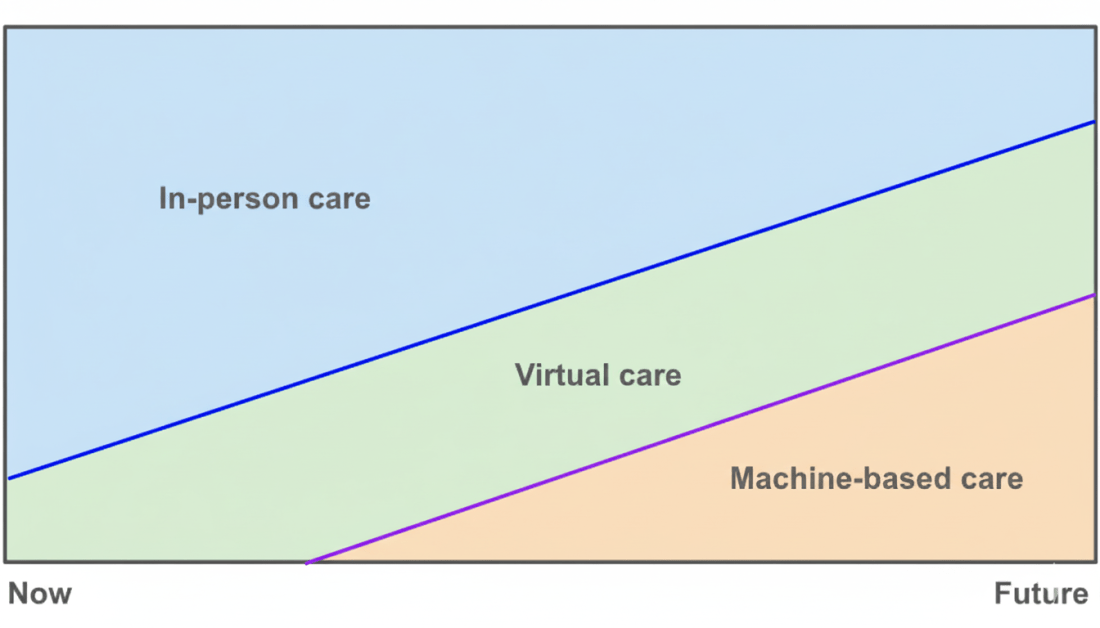

The Gameboard for AI in Healthcare

Healthcare AI is stuck in "middle to middle"—helping clinicians draft and summarize, but not prescribing. Level-5 autonomy requires moving from probabilistic language to deterministic actions with verifiable plans and safety gates.

GPT-5 vs Grok4, No Health AI Champion Yet

GPT-5 is more cautious than Grok4 with ambiguous inputs, but both fail anatomy vetoes and physics checks. Progress, not readiness. Until models enforce unit verification and constraint gates, they're promising tools, not dependable partners.

AI Can’t “Cure All Diseases” Until It Beats Phase 2

AI-designed molecules are moving fast, but 70% still die in Phase 2. Population genetics gave us PCSK9, but it's too slow. Live-biology platforms that test drugs on evolving patient tissue could finally crack the clinical proof-of-concept bottleneck.